bosschop

New Member

- Mar 11, 2023

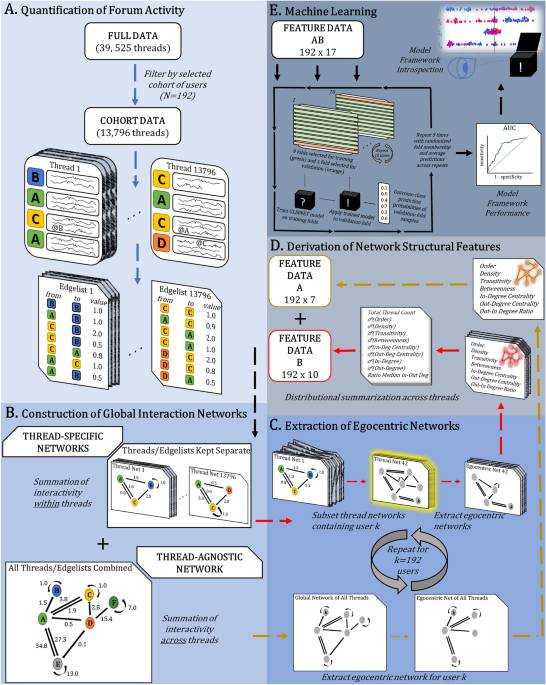

Breaking the silence: leveraging social interaction data to identify high-risk suicide users online using network analysis and machine learning - Scientific Reports

Suicidal thought and behavior (STB) is highly stigmatized and taboo. Prone to censorship, yet pervasive online, STB risk detection may be improved through development of uniquely insightful digital markers. Focusing on Sanctioned Suicide, an online pro-choice suicide forum, this work derived 17...

It's more focused on the technical side than on the content itself, and considering it's an academic paper it's wordy as fuck, but the important part is the data collection and pre-processing.

"Using network data generated from over 3.2 million unique interactions of N = 192 individuals, n = 48 of which were determined to be highest risk users (HRUs), a machine learning classification model was trained, validated, and tested to predict HRU status."

"A complete record of posting activity within the "Suicide Discussion" subforum of Sanctioned Suicide, from inception on March 17, 2018 to February 5, 2021, was programmatically collected and organized as tabular data using a custom Python (v3.8) script that primarily leveraged the BeautifulSoup package to parse the site's HTML and XML information.34 This effort resulted in a dataset containing more than 600,000 time-stamped posts across nearly 40,000 threads and over 11,000 users. This posting activity information consisted of (i) thread title, (ii) thread author, (iii) post author, (iv) post date, (v) post text content, and (vi) direct mentions and references to other user comments within the post text. All information, except for post text, was used in this study. To impose an added layer of user anonymity, each username was automatically assigned a randomly generated, 32-character hashed ID. These de-identifying IDs were automatically replaced with all instances of users' online handles within the data prior to subsequent preprocessing and analysis."

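For anyone wondering what the de-identification step in that quote might look like in practice, here is a minimal sketch. It is not the authors' script: the field names `thread_author` and `post_author`, the helper names, and the use of `uuid.uuid4().hex` to produce a random 32-character ID are all assumptions of mine.

```python
import uuid


def build_id_map(usernames):
    """Give each distinct username one random 32-character hex ID."""
    id_map = {}
    for name in usernames:
        if name not in id_map:
            # uuid4().hex is a random 32-character hexadecimal string
            # (assumed stand-in for the paper's "hashed ID").
            id_map[name] = uuid.uuid4().hex
    return id_map


def pseudonymize(rows, fields=("thread_author", "post_author")):
    """Return copies of rows with usernames replaced by IDs, plus the mapping."""
    names = [row[field] for row in rows for field in fields]
    id_map = build_id_map(names)
    replaced = [
        {**row, **{field: id_map[row[field]] for field in fields}}
        for row in rows
    ]
    return replaced, id_map
```

The same handle always maps to the same ID, so interactions between users can still be linked up afterwards. Note that anyone holding `id_map` can reverse the replacement, so this is pseudonymization rather than true anonymization.
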
"A structured approach to select a subset of appropriate users and identify HRUs was devised based on the findings discussed through the New York Times investigation into Sanctioned Suicide35 as well as the authors' thorough review of the forum content. Moreover, this strategy was described and utilized in a previously published analysis of users on the Sanctioned Suicide forum.33 To reiterate herein, data was first filtered by searching for thread titles with the following keywords/phrases: "bus is here," "catch the bus," "fare well," "farewell," "final day," "good bye," "goodbye," "leaving," "my time," "my turn," "so long," and "took SN." Of note, "catch the bus" is a euphemism adopted by the community to symbolize suicide,33 while "SN" is short for sodium nitrate, an increasingly popular chemical used in suicide-related methodology. These terms were used to identify "goodbye threads" on Sanctioned Suicide, and thus have the highest probability of signaling for an impending attempt"

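The title filter they describe boils down to a keyword search. A rough sketch, assuming case-insensitive substring matching (the quote does not say how the matching was actually done, and the function names are made up for illustration):

```python
# Keywords/phrases exactly as listed in the quoted passage.
KEYWORDS = (
    "bus is here", "catch the bus", "fare well", "farewell",
    "final day", "good bye", "goodbye", "leaving",
    "my time", "my turn", "so long", "took SN",
)


def matches_keywords(title, keywords=KEYWORDS):
    """True if any keyword appears in the title, ignoring case (assumed rule)."""
    lowered = title.casefold()
    return any(keyword.casefold() in lowered for keyword in keywords)


def filter_threads(titles):
    """Keep only the thread titles that contain at least one keyword."""
    return [title for title in titles if matches_keywords(title)]
```

Plain substring matching like this is crude: a title such as "not so long ago" would also match, so a filter of this kind only narrows the data down and still needs a manual review step like the one the authors mention.
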
In simpler terms, a university research group collected a large amount of data from the forum, then filtered the threads by searching their titles for certain keywords. Not that this is of any interest to me personally, but @RainAndSadness, are there counter-measures against this kind of thing, or did you already know about this?

They even said: "Accordingly, written informed consent was waived and this study "exempt" from further review."
